As artificial intelligence (AI) becomes ubiquitous, familiarity with its growing number of subsets, such as machine learning, robotics, chatbots, and neural networks, has expanded as well. One of AI’s most recent iterations, emotion AI (also called artificial emotional intelligence), is gaining a foothold in a number of industries as a way to draw insights from human behavioral patterns, which organizations can then use to build predictive models for better decision-making.

Defining Emotion AI

One of the main things that distinguishes artificial intelligence from humans is that AI doesn’t have emotions. Another long-standing limitation was AI’s inability to adapt to particular situations and to adjust its processes as its environment changed. Through technologies like machine learning, however, AI systems can now learn from experience, make predictions, and proactively work toward certain outcomes.

So, if machines can replicate cognition, can they also replicate the way we feel? To a degree, yes: emotion AI is what lets machines recognize, interpret, and simulate human emotions.

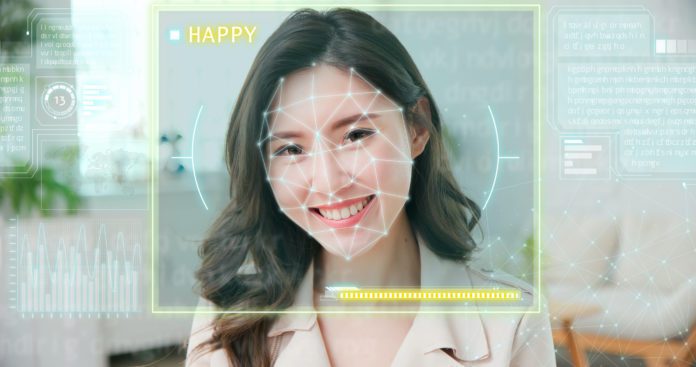

Emotion AI uses artificial intelligence to detect human emotions and learns to interpret and respond appropriately to both verbal and non-verbal signals. By reading the emotive channels of human communication, it gives machines a degree of emotional intelligence: the ability to measure, understand, and simulate human emotions, and even to react to them. Emotion AI is also known as affective computing.

How Does Emotion AI Work?

Using machine learning and deep learning, emotion AI software takes speech and images as input. It can recognize a smile, for instance, and analyze it together with the person’s tone of voice and other parameters to judge whether the smile is a happy or a sad one. The software can also use this information to predict whether a person’s emotional state is likely to affect a situation positively or negatively.
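
Combining what the face and the voice suggest into one judgment is often done by late fusion: each modality’s model outputs its own emotion probabilities, and the system averages them. The minimal Python sketch below assumes two hypothetical upstream models that already return such probabilities; the names `fuse_modalities` and `top_emotion` and the equal default weighting are illustrative choices, not how any particular emotion AI product works.

```python
# Type alias: maps an emotion label to a probability (hypothetical model output format).
Scores = dict[str, float]


def fuse_modalities(face: Scores, voice: Scores, face_weight: float = 0.5) -> Scores:
    """Late fusion: weighted average of the face and voice distributions, renormalized."""
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be between 0 and 1")
    labels = set(face) | set(voice)
    fused = {
        label: face_weight * face.get(label, 0.0)
        + (1.0 - face_weight) * voice.get(label, 0.0)
        for label in labels
    }
    total = sum(fused.values())
    if total == 0:
        return fused
    return {label: p / total for label, p in fused.items()}


def top_emotion(scores: Scores) -> str:
    """Return the label with the highest fused probability."""
    return max(scores, key=scores.get)


# A smile the face model rates as mostly happy, paired with a voice
# the voice model rates as mostly sad.
face_scores = {"happy": 0.7, "sad": 0.3}
voice_scores = {"happy": 0.1, "sad": 0.9}
print(top_emotion(fuse_modalities(face_scores, voice_scores)))  # sad
```

Here the smile alone would read as happy, but the sad-sounding voice tips the combined judgment to "sad". Real systems may instead learn the weights, or fuse features earlier inside a single network.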

Emotion AI is not limited to analyzing facial expressions and voice, however. Sentiment analysis, a natural language processing (NLP) method, detects and quantifies the emotional undertones of text samples. Other inputs include additional video signals, gait analysis, and physiological signals such as sweating, and researchers are working on further measures, such as heart rate and skin temperature, to determine how a subject is feeling.
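
Sentiment analysis can be done with trained models or, in its simplest form, with a lexicon of scored words. The sketch below shows the lexicon approach under heavy simplifying assumptions: the words and scores in `LEXICON` are invented for illustration, and negation is handled only for a word directly following "not", "never", or "no".

```python
import re

# Hypothetical mini-lexicon of word polarities; production systems use large
# curated lexicons or trained models instead.
LEXICON = {"love": 2.0, "great": 1.5, "happy": 1.0, "slow": -1.0, "bad": -1.5, "hate": -2.0}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text: str) -> float:
    """Sum word polarities, flipping the sign of any word directly after a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0.0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def sentiment_label(score: float) -> str:
    """Map a numeric score to a coarse label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for review in ["I love this phone, but the battery is bad", "The service was not great"]:
    s = sentiment_score(review)
    print(f"{s:+.1f} {sentiment_label(s)}: {review}")
```

The first review nets +0.5 (positive) because "love" outweighs "bad"; in the second, "not" flips "great" to -1.5. Sarcasm, context, and longer-range negation are where this approach breaks down and trained NLP models tend to do better.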

Emotion AI: Applications

Here are some examples of how emotion AI is applied and who can benefit from it.

Advertising

Emotion AI is especially useful in advertising research. Product and service advertising appeals to human emotions to reach a target market. Companies build their campaigns around what customers need and how they feel about certain things, then evaluate a campaign’s effectiveness by how it affects customers emotionally.

People’s needs and emotions are at the core of what drives them to buy products and use services. As emotion AI learns to recognize these emotional drivers, companies and advertising firms can adjust their marketing strategies and improve their campaigns.

But how does emotion AI gather the data it needs to identify, gauge, and analyze the emotions of customers?

One way is through smart chatbots that can identify different types of customer behavior and the motives behind them. Chatbots can also strengthen a company’s long-term relationship with its customers by offering personalized product recommendations and by addressing each customer’s questions and concerns individually.

Another way is with smart CCTV cameras. Retail stores, outlets, and service centers can install these cameras to record, in real time, customers’ reactions to product packaging, prices, menus, service offerings, and even the store’s physical presentation. Emotion AI then analyzes the footage using facial and speech recognition, and the store can use the results to improve its range of products or services, its pricing, and other aspects of its business.
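
Once video analysis has tagged each observed reaction with an emotion, turning the footage into business decisions is largely a matter of aggregation. The sketch below assumes a hypothetical upstream step that emits `(item, emotion)` pairs and computes, per item, the share of reactions labeled as favorable; which labels count as favorable (`POSITIVE`) is an assumption each store would have to set for itself.

```python
from collections import Counter, defaultdict

# Hypothetical set of labels counted as favorable reactions.
POSITIVE = {"happy", "surprised"}


def positive_share_by_item(reactions: list[tuple[str, str]]) -> dict[str, float]:
    """Fraction of recorded reactions per item whose emotion label is in POSITIVE."""
    counts: defaultdict[str, Counter] = defaultdict(Counter)
    for item, emotion in reactions:
        counts[item][emotion] += 1
    return {
        item: sum(c[label] for label in POSITIVE) / sum(c.values())
        for item, c in counts.items()
    }


# Hypothetical (item, detected emotion) pairs, as a video-analysis step might emit them.
observed = [
    ("packaging_a", "happy"),
    ("packaging_a", "neutral"),
    ("packaging_a", "surprised"),
    ("packaging_b", "sad"),
    ("packaging_b", "happy"),
    ("packaging_b", "angry"),
]
for item, share in sorted(positive_share_by_item(observed).items()):
    print(f"{item}: {share:.0%} positive")
```

With this sample data, packaging A draws favorable reactions two times out of three and packaging B one time out of three.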

Companies can also use cameras built into selected customers’ smartphones, computers, and smart TVs, applying emotion AI to test how those customers react to particular online content. Brands can then plan their online presence accordingly.

Call centers and customer service

Beyond advertising, emotion AI is also used in call centers. With voice analytics software, a call center can identify a caller’s mood so that agents can adjust how they handle the conversation.
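
Voice analytics tools typically extract acoustic features such as pitch, loudness, and speaking rate and feed them to a trained classifier. As a deliberately simplified sketch, the code below uses a single feature, root-mean-square (RMS) loudness per audio frame, and a hypothetical threshold rule (`caller_arousal`, with an assumed `loud_threshold` of 0.3) to flag high-arousal calls. Loudness alone indicates how agitated a caller sounds, not whether they are angry or excited, so this illustrates the feature-extraction step rather than a working mood detector.

```python
import math


def rms_energy(frame: list[float]) -> float:
    """Root-mean-square amplitude of one audio frame (samples assumed in [-1, 1])."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def caller_arousal(frames: list[list[float]], loud_threshold: float = 0.3) -> str:
    """Hypothetical rule: 'high' arousal if more than half the frames exceed the threshold."""
    loud_frames = sum(1 for frame in frames if rms_energy(frame) > loud_threshold)
    return "high" if loud_frames > len(frames) / 2 else "low"


# Toy frames standing in for short slices of real call audio.
calm_call = [[0.1, -0.1, 0.05, -0.05]] * 4
heated_call = [[0.6, -0.5, 0.7, -0.6]] * 4
print(caller_arousal(calm_call), caller_arousal(heated_call))  # low high
```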

Mental health

Emotion AI can also help mental health organizations and practitioners. Mental health monitoring apps can listen to people speaking into their phones and use voice analysis to gain insight into their mood or detect signs of anxiety. Developers can also build algorithms that use phone data to predict how severe a person’s depression is.

Automotive

Emotion AI can be built into vehicles, especially connected cars, to detect distractions, including the driver’s state of mind. For example, it can detect whether a driver is arguing with a passenger or with someone on the phone (via Bluetooth), or whether the driver is tired and drowsy. The software can read signals such as the driver’s blood pressure or tone of voice, and if it detects anger, exhaustion, or distress, it can reduce the car’s speed or bring the car to a slow stop at the side of the road.
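
The last step of that pipeline can be pictured as a mapping from estimated driver states to escalating responses. The sketch assumes hypothetical upstream models that score anger, fatigue, and distress between 0 and 1; the `DriverState` fields, the two thresholds, and the three `Action` values are illustrative, not taken from any vehicle system.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    REDUCE_SPEED = "reduce_speed"
    PULL_OVER = "pull_over"


@dataclass
class DriverState:
    # Hypothetical scores in [0, 1] from upstream emotion and fatigue models.
    anger: float
    fatigue: float
    distress: float


def choose_action(state: DriverState, warn: float = 0.6, critical: float = 0.85) -> Action:
    """Escalate based on the strongest warning signal (thresholds are illustrative)."""
    worst = max(state.anger, state.fatigue, state.distress)
    if worst >= critical:
        return Action.PULL_OVER
    if worst >= warn:
        return Action.REDUCE_SPEED
    return Action.NONE


print(choose_action(DriverState(anger=0.7, fatigue=0.2, distress=0.1)).value)  # reduce_speed
```

Keying the response to the worst of the three scores keeps the logic conservative: a single strong signal is enough to trigger an intervention.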

Challenges Facing Emotion AI

Many people recognize the risks of emotion AI and question its accuracy. Human emotions are complex and inherently hard to read, and people sometimes feel differently from what they claim to feel. If humans are not experts at reading emotions, how can an algorithm reliably detect, identify, and analyze them?

Paul Zak, a neuroscience researcher and the CEO of Immersion Neuroscience, says that while people may lie about how they feel, their brains don’t. Changes in a person’s oxytocin levels, the neural signature of emotional resonance in the brain, can be measured scientifically.

Emotions are also interpreted subjectively, so emotion AI can be prone to bias. For example, a system may assign negative emotions more often to people of certain ethnicities, which can have harmful consequences.

Amid growing concern in the scientific community about the effectiveness, risks, reliability, and potential misuse of emotion AI, calls for ethical regulation are getting louder. Technologies that read human emotions need rigorous auditing.
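
One basic audit for the kind of bias described above is to run a system on faces that share the same ground-truth expression (for example, all neutral) and compare how often it reports a negative emotion for each demographic group. The sketch below assumes a hypothetical list of `(group, predicted_emotion)` pairs from such a test set; the labels in `NEGATIVE` and the group names are placeholders.

```python
from collections import defaultdict

# Hypothetical set of labels treated as "negative" for the audit.
NEGATIVE = {"angry", "sad", "contempt"}


def negative_rate_by_group(predictions: list[tuple[str, str]]) -> dict[str, float]:
    """Share of predictions per group whose label is in NEGATIVE."""
    totals: defaultdict[str, int] = defaultdict(int)
    negatives: defaultdict[str, int] = defaultdict(int)
    for group, emotion in predictions:
        totals[group] += 1
        if emotion in NEGATIVE:
            negatives[group] += 1
    return {group: negatives[group] / totals[group] for group in totals}


def max_rate_ratio(rates: dict[str, float]) -> float:
    """Highest group rate divided by the lowest; 1.0 means every group is treated alike."""
    low, high = min(rates.values()), max(rates.values())
    if low == 0:
        return float("inf") if high > 0 else 1.0
    return high / low


# Hypothetical predictions on faces whose ground-truth expression is neutral.
neutral_faces = [
    ("group_a", "neutral"), ("group_a", "neutral"), ("group_a", "angry"), ("group_a", "neutral"),
    ("group_b", "angry"), ("group_b", "neutral"), ("group_b", "sad"), ("group_b", "neutral"),
]
rates = negative_rate_by_group(neutral_faces)
print(rates, max_rate_ratio(rates))
```

In this made-up sample, group_b’s neutral faces are read as negative twice as often as group_a’s, giving a ratio of 2.0; a ratio near 1.0 would indicate parity. How large a gap is acceptable is a policy decision, not something the code can settle.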
