Editor’s Note: Occasionally, Enterprise Networking Planet is proud to run guest posts from authors in the field. Today, Rob Marson of JDSU explores options for optimizing the performance of applications hosted on private clouds but delivered over public WANs.

Sometimes clouds mean storms. The enterprise cloud is no different.

Cloud-hosted applications create a number of challenges for network administrators, especially when they run over a public wide area network, as most do. Today’s delivery chain for applications is somewhat decoupled, with the WAN carrying data from enterprises to apps housed in offsite data centers outside of the enterprises’ purview. The public WAN’s performance is absolutely crucial to application delivery, but the public WAN doesn’t provide much visibility into those applications.

Further compounding the challenges, even small changes in the network can magnify application performance problems. And poor network performance is more than just a nuisance for business-critical applications like video, VoIP, unified communications and virtual desktops, all of which are creeping ever more into the cloud.

How do you detect and resolve issues in the network if it’s not actually your network?

The WAN Plays a Critical Role in Application Delivery

Packet loss within a WAN can occur for many reasons, including network congestion, protection events such as route reconvergence, and network misconfigurations. Furthermore, most WANs are oversubscribed and rely on statistical multiplexing to maximize available capacity. Because data traffic is unpredictable, wide area networking equipment, such as switches, routers and gateways, can react to network congestion by selectively discarding certain packets.

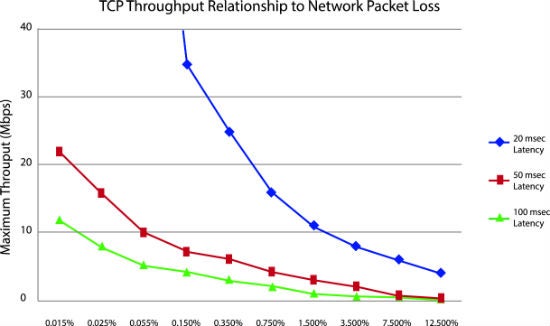

And don’t be fooled by low packet loss numbers. Even in the 1 to 2 percent range, packet loss often has a disproportionately large effect on application performance, with symptoms ranging from jitter on a phone call to the crash of a virtual application. Packet loss often means reduced application throughput or outright failure, and a significant increase in latency compounds the problem.

Getting ahead of the curve is critical. Administrators must be able to pinpoint the location and source of latency and determine beforehand what the implications for specific applications could be.

The chart above shows the effect of packet loss and latency on applications using TCP/IP.
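To put rough numbers on that relationship, here is a minimal sketch based on the widely cited Mathis approximation for steady-state TCP throughput, which bounds throughput by (MSS / RTT) × (C / √p), where p is the packet loss rate and C is about 1.22. This is an assumption chosen for illustration, not necessarily the model behind the chart, and the function name and sample values are hypothetical.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate ceiling on steady-state TCP throughput, in bits per second.

    Uses the Mathis approximation: (MSS / RTT) * (C / sqrt(p)).
    It is a rough model for illustration, not a measurement.
    """
    if not 0 < loss_rate <= 1:
        raise ValueError("loss_rate must be in (0, 1]")
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    c = math.sqrt(3 / 2)  # model constant, roughly 1.22
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))


if __name__ == "__main__":
    # Hypothetical values: 1460-byte segments, two round-trip times.
    for loss in (0.0001, 0.001, 0.01, 0.02):
        for rtt_ms in (20, 80):
            mbps = mathis_throughput_bps(1460, rtt_ms / 1000, loss) / 1e6
            print(f"loss={loss:.2%} rtt={rtt_ms} ms -> about {mbps:.1f} Mbit/s")
```

Under this model, throughput falls with the square root of the loss rate and in direct proportion to round-trip time, which is why a seemingly small amount of loss on a long WAN path can hurt so much.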

The Situation Today

Full network visibility and an understanding of the innards of an application require more than simply keeping an eye on bandwidth consumption per protocol. HTTP traffic, for example, carries applications with very diverse levels of business priority. Without sufficient application-level visibility, it’s difficult for enterprises to make sure their available network resources are delivering a sufficient return on investment.

But today there is no simple way to measure user-application response times end to end across the entire service delivery network, since it spans both proprietary and external networks. To understand and manage user experiences, isolate the sources of performance impairments, and make the necessary adjustments to network settings and policies in real time, enterprises need to employ new strategies.

Distributed applications require distributed data-capture strategies in order to isolate issues. Although an increasing number of network devices provide embedded traffic classification and monitoring capabilities, these implementations often degrade device performance, cost more, and lack the ability to look beyond packet headers.

An effective solution must look deep into the content within the applications. The growing mobile-user presence and the increased complexity of network environments mean that users typically pick up IP addresses dynamically. For this reason, a user could have several IP addresses during a single session. This evolution makes the network nearly impossible to monitor, secure, and manage solely by IP address.
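As a rough illustration of why identity matters more than address, the sketch below groups response-time samples by user instead of by IP. It assumes a hypothetical table of address leases (which user held which IP, and when), of the kind that might be assembled from DHCP or authentication logs. The `Lease` and `Sample` types and all values are invented for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Lease:
    ip: str
    user: str
    start: float  # epoch seconds, inclusive
    end: float    # epoch seconds, exclusive


@dataclass(frozen=True)
class Sample:
    ip: str
    timestamp: float
    response_ms: float


def user_for(sample, leases):
    """Return the user who held the sample's IP at the sample's timestamp, if any."""
    for lease in leases:
        if lease.ip == sample.ip and lease.start <= sample.timestamp < lease.end:
            return lease.user
    return None


def response_times_by_user(samples, leases):
    """Group response times by user; unmatched samples are kept under 'unknown:<ip>'."""
    grouped = defaultdict(list)
    for sample in samples:
        key = user_for(sample, leases) or f"unknown:{sample.ip}"
        grouped[key].append(sample.response_ms)
    return dict(grouped)


# Hypothetical data: alice roams to a new address, and bob later reuses her old one.
leases = [
    Lease("10.0.0.5", "alice", 0, 100),
    Lease("172.16.4.9", "alice", 100, 200),
    Lease("10.0.0.5", "bob", 100, 200),
]
samples = [
    Sample("10.0.0.5", 10, 120.0),
    Sample("172.16.4.9", 150, 340.0),
    Sample("10.0.0.5", 150, 95.0),
]
by_user = response_times_by_user(samples, leases)
```

Keyed by IP alone, the samples from 10.0.0.5 would blend two different users, and alice's session would be split across two addresses.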

Requirements for New WAN Monitoring Solutions

A truly network-wide monitoring solution, extending to remote branch offices, must provide access to key performance information, including:

- Measurement and analysis of application performance for all transactions

- Comparisons of response times against intelligent baselines and thresholds

- Identification of abnormal latencies in the network

- Isolation of a problem to a specific link or application server, or the application itself

- Delivery of alerts on any performance deterioration
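One simple way to picture the baseline-and-threshold requirement is a rolling window of recent response times, with an alert raised when a new measurement sits well above what that window predicts. The sketch below is a minimal illustration under that assumption. The class name, window size, and multiplier `k` are hypothetical, and a production product would likely use something more sophisticated, such as time-of-day baselines.

```python
import statistics
from collections import deque


class ResponseTimeBaseline:
    """Flag response times that sit well above a rolling baseline."""

    def __init__(self, window=50, min_samples=10, k=3.0, min_spread_ms=1.0):
        self.samples = deque(maxlen=window)  # most recent normal measurements
        self.min_samples = min_samples       # learn quietly until this many are seen
        self.k = k                           # how many deviations count as abnormal
        self.min_spread_ms = min_spread_ms   # floor so a flat baseline is not hypersensitive

    def check(self, response_ms):
        """Return True if response_ms is abnormal; normal values update the baseline."""
        abnormal = False
        if len(self.samples) >= self.min_samples:
            history = list(self.samples)
            mean = statistics.fmean(history)
            spread = max(statistics.pstdev(history), self.min_spread_ms)
            abnormal = response_ms > mean + self.k * spread
        if not abnormal:
            # Abnormal values are left out so one spike does not inflate the baseline.
            self.samples.append(response_ms)
        return abnormal


# Hypothetical usage: steady responses near 100 ms, then a spike.
baseline = ResponseTimeBaseline(window=20, min_samples=5)
warmup_flags = [baseline.check(ms) for ms in (100, 102, 98, 101, 99, 100)]
spike_flagged = baseline.check(250)
recovered_flagged = baseline.check(101)
```

Running one such baseline per application and per network segment is one way to narrow a slowdown to a specific link or server, since only the affected baselines would raise alerts.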

An effective monitoring solution must also provide a scalable approach to pervasive monitoring, management, and troubleshooting, with an architecture that decouples data collection from management, aggregation, and analysis. This approach allows a highly scalable, distributed, cost-effective method to add visibility throughout the network, reducing the complexity of capturing rich intelligence from the network, the content, and the end-user experience.

Outsourcing WAN Monitoring and Management

Unfortunately, many enterprises lack the funds to deploy a sophisticated, ubiquitous WAN monitoring strategy to each remote branch office. Others lack the in-house expertise. That’s where outsourcing comes in. Providing enhanced WAN traffic visibility creates opportunities for service providers and options for enterprise IT administrators.

It’s a win-win for service providers and enterprises. The enterprise gets better network visibility and response to network issues. The service provider becomes a more trusted and valuable resource for the enterprise, rather than a simple middleman between application and end user.

Outsourcing eliminates hardware maintenance and support staff costs associated with on-premises technology. The managed service provider can also take advantage of economies of scale to use the most sophisticated WAN monitoring and management products on the market and employ people with the expertise to more effectively assess the data collected. To deliver these types of services, network operators need to have visibility into their network’s capacity use, application performance, and bottleneck locations.

Service providers must be able to quickly isolate the sources of issues, indemnify their services should problems arise, and provide the information enterprise IT administrators require about network performance. Some questions enterprises should consider when assessing managed network services include:

- Does the service provider possess the necessary technical and business expertise to adapt to the enterprise’s evolving needs?

- Are the provider’s services customizable to the enterprise’s specific needs?

- Does the service provider offer a real-time network view?

- Is application and/or network performance backed with specific service level agreements?

The Value of Effective Monitoring

Industry studies show that just a half-second delay in generating search results worsens the user experience and, in effect, sheds a significant portion of a company website’s traffic. Poor business-critical application performance can also hinder productivity and damage reputations and relationships. Clearly, proactive and real-time monitoring to improve the quality of the end-user experience offers huge benefits.

With effective monitoring, those dark stormy enterprise clouds will begin to lighten.