
Optical networking is a technology that uses light to transmit data rapidly between devices. Discover how it’s used in today’s world.

Optical networking is a technology that uses light signals to transmit data through fiber-optic cables. It encompasses a system of components, including optical transmitters, optical amplifiers, and fiber-optic infrastructure to facilitate high-speed communication over long distances.

This technology supports the transmission of large amounts of data with high bandwidth, enabling faster and more efficient communication compared to traditional copper-based networks.

The main components of optical networking include fiber optic cables, optical transmitters, optical amplifiers, optical receivers, transceivers, wavelength division multiplexing (WDM), optical switches and routers, optical cross-connects (OXCs), and optical add-drop multiplexers (OADMs).

Fiber optic cables are a type of high-capacity transmission medium with glass or plastic strands known as optical fibers.

These fibers carry light signals over long distances with minimal signal loss and high data transfer rates. A cladding material surrounds the core of each fiber, reflecting the light signals back into the core for efficient transmission.
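The reflection at the core-cladding boundary can be illustrated with a short calculation. This is a minimal sketch: the refractive indices used in the usage note (1.48 for the core, 1.46 for the cladding) are illustrative assumptions, not values from this article, and the function names are hypothetical.

```python
import math


def critical_angle_deg(n_core: float, n_cladding: float) -> float:
    """Angle of incidence (measured from the normal) above which light
    striking the core-cladding boundary is totally reflected."""
    if n_cladding >= n_core:
        raise ValueError("total internal reflection requires n_core > n_cladding")
    return math.degrees(math.asin(n_cladding / n_core))


def numerical_aperture(n_core: float, n_cladding: float) -> float:
    """Light-gathering ability of the fiber, from the two refractive indices."""
    return math.sqrt(n_core**2 - n_cladding**2)


if __name__ == "__main__":
    # Assumed, illustrative indices for a silica fiber.
    print(round(critical_angle_deg(1.48, 1.46), 1))
    print(round(numerical_aperture(1.48, 1.46), 3))
```

With these assumed indices, the critical angle comes out at roughly 80.6 degrees, so only light traveling nearly parallel to the fiber axis stays trapped in the core.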

Fiber optic cables are widely used in telecommunications and networking applications due to immunity to electromagnetic interference and reduced signal attenuation compared to traditional copper cables.

Optical transmitters convert electrical signals into optical signals for transmission over fiber optic cables. Their primary function is to modulate a light source, usually a laser diode or light-emitting diode (LED), in response to electrical signals representing data.

Strategically placed along the optical fiber network, optical amplifiers boost the optical signals to maintain signal strength over extended distances. This component compensates for signal attenuation and extends the distance signals can travel without expensive and complex optical-to-electrical signal conversion.
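A rough power budget shows why amplification matters. The sketch below assumes a fiber attenuation of 0.2 dB/km, a 0 dBm transmitter, and a receiver sensitivity of -28 dBm; all three figures are illustrative assumptions, and the function name is hypothetical. It ignores connector losses, safety margins, and amplifier noise, which real link designs must account for.

```python
def max_reach_km(tx_power_dbm, rx_sensitivity_dbm, attenuation_db_per_km,
                 amplifier_gains_db=()):
    """Longest fiber span the power budget allows.

    The budget is the gap between transmit power and receiver sensitivity,
    plus whatever gain inline amplifiers add back along the way.
    """
    budget_db = tx_power_dbm - rx_sensitivity_dbm + sum(amplifier_gains_db)
    return budget_db / attenuation_db_per_km


if __name__ == "__main__":
    # Assumed values: 0 dBm transmitter, -28 dBm sensitivity, 0.2 dB/km loss.
    print(max_reach_km(0, -28, 0.2))                              # no amplifier
    print(max_reach_km(0, -28, 0.2, amplifier_gains_db=(20,)))    # one 20 dB amplifier
```

Under these assumptions the unamplified link reaches about 140 km, and a single 20 dB amplifier stretches that to about 240 km.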

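The primary types of optical amplifiers include erbium-doped fiber amplifiers (EDFAs), semiconductor optical amplifiers (SOAs), and Raman amplifiers.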

At the reception end of the optical link, optical receivers transform incoming optical signals back into electrical signals.

Transceivers, short for transmitter-receiver, are multifunctional devices that combine the functionalities of both optical transmitters and receivers into a single unit, facilitating bidirectional communication over optical fiber links. They turn electrical signals into optical signals for transmission, and convert received optical signals back into electrical signals.

Wavelength division multiplexing (WDM) allows the simultaneous transmission of multiple data streams over a single optical fiber. The fundamental principle of WDM is to use different wavelengths of light to carry independent data signals, supporting increased data capacity and effective utilization of the optical spectrum.

WDM is widely used in long-haul and metro optical networks, providing a scalable and cost-effective solution for meeting the rising demand for high-speed and high-capacity data transmission.
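To make the idea of separate wavelengths concrete, the sketch below computes channel frequencies and wavelengths on a fixed grid. It assumes the 193.1 THz anchor frequency and 100 GHz spacing of the ITU-T G.694.1 DWDM grid; the function name is hypothetical and the code is only an illustration, not a channel plan for any particular system.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
ANCHOR_FREQUENCY_THZ = 193.1  # assumed grid anchor (ITU-T G.694.1)


def dwdm_channel(n: int, spacing_ghz: float = 100.0):
    """Return (frequency in THz, vacuum wavelength in nm) for grid index n.

    n may be negative, zero, or positive; each step moves one channel
    spacing away from the anchor frequency.
    """
    frequency_thz = ANCHOR_FREQUENCY_THZ + n * spacing_ghz / 1000.0
    wavelength_nm = SPEED_OF_LIGHT_M_PER_S / (frequency_thz * 1e12) * 1e9
    return frequency_thz, wavelength_nm


if __name__ == "__main__":
    for n in range(-2, 3):
        freq, wl = dwdm_channel(n)
        print(f"channel {n:+d}: {freq:.1f} THz, {wl:.2f} nm")
```

Each channel sits less than a nanometer from its neighbors, which is how dozens of independent signals can share one fiber.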

Optical add-drop multiplexers (OADMs) are major components in WDM optical networks, offering the capability to selectively add (inject) or drop (extract) specific wavelengths of light signals at network nodes. OADMs help refine the data flow within the network.
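The add and drop operations can be modeled in a few lines. This is a simplified sketch, not a description of real OADM hardware: the fiber is represented as a dictionary mapping channel labels to payloads, and the function and channel names are hypothetical.

```python
def add_drop(incoming, drop, add):
    """Model one OADM node.

    incoming: dict of channel label -> signal arriving on the fiber
    drop:     set of channel labels to extract at this node
    add:      dict of channel label -> signal to inject at this node
    Returns (outgoing, dropped).
    """
    dropped = {ch: sig for ch, sig in incoming.items() if ch in drop}
    outgoing = {ch: sig for ch, sig in incoming.items() if ch not in drop}
    for ch, sig in add.items():
        if ch in outgoing:
            # Two signals cannot share a wavelength on the same fiber.
            raise ValueError(f"channel {ch} is already in use")
        outgoing[ch] = sig
    return outgoing, dropped


if __name__ == "__main__":
    fiber = {"ch1": "video", "ch2": "voice", "ch3": "backup"}
    out, local = add_drop(fiber, drop={"ch2"}, add={"ch2": "sensor data"})
    print(out)
    print(local)
```

Channels that are neither added nor dropped pass straight through the node, and a dropped wavelength can be reused immediately for locally added traffic.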

Both optical switches and routers contribute to the development of advanced optical networks with solutions for high-capacity, low-latency, and scalable communication systems that can meet the changing demands of modern data transmission.

Optical cross-connects (OXCs) enable the reconfiguration of optical connections by selectively routing signals from input fibers to desired output fibers. By streamlining wavelength-specific routing and rapid reconfiguration, OXCs contribute to the flexibility and low-latency characteristics of advanced optical communication systems.
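One way to picture an OXC is as a reconfigurable table that maps each input port and wavelength to an output port. The class below is a hypothetical illustration of that idea; the class name, method names, and port numbers are assumptions, and real devices add constraints this sketch leaves out.

```python
class OpticalCrossConnect:
    """Toy model: (input port, channel) -> output port."""

    def __init__(self):
        self._table = {}

    def connect(self, in_port, channel, out_port):
        """Create or change a cross-connection."""
        for (port, ch), out in self._table.items():
            # The same wavelength from two inputs cannot share one output fiber.
            if ch == channel and out == out_port and port != in_port:
                raise ValueError(f"{channel} already occupies output {out_port}")
        self._table[(in_port, channel)] = out_port

    def route(self, in_port, channel):
        """Return the output port for a signal, or None if unconnected."""
        return self._table.get((in_port, channel))


if __name__ == "__main__":
    oxc = OpticalCrossConnect()
    oxc.connect(1, "ch1", 3)
    print(oxc.route(1, "ch1"))
    oxc.connect(1, "ch1", 4)   # reconfigure the same signal to a new output
    print(oxc.route(1, "ch1"))
```

Reconfiguration is just a table update, which reflects why an OXC can redirect traffic quickly.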

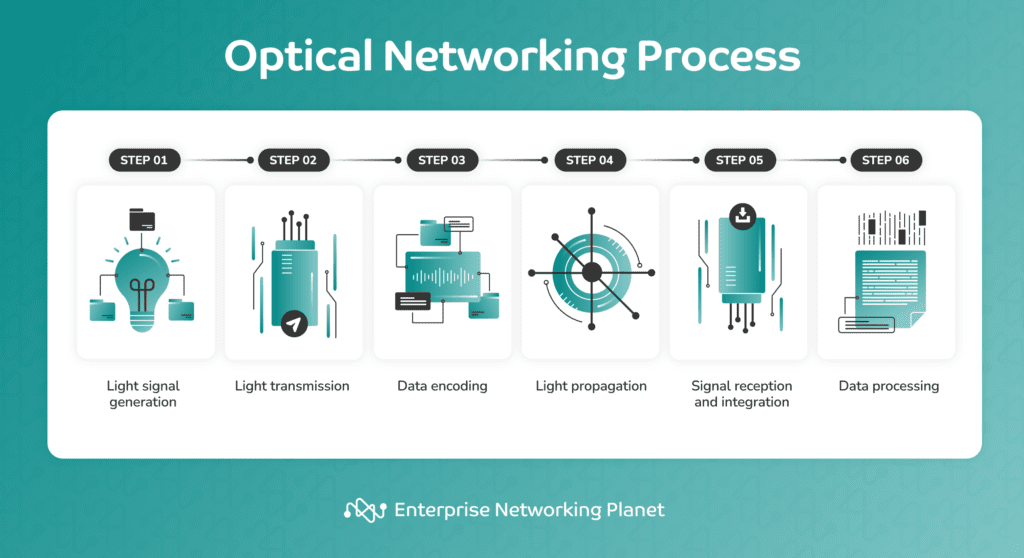

Optical networking functions by harnessing light signals to transmit data through fiber-optic cables, creating a rapid communication framework. The process involves light signal generation, light transmission, data encoding, light propagation, signal reception and integration, and data processing.

The optical networking process begins by converting data into light pulses. This conversion is typically achieved using laser sources, which produce the stable, precisely controlled light needed to represent information accurately.

The system sends light pulses carrying data through a fiber optic cable during this phase. The light travels within the cable’s core, bouncing off the surrounding cladding layer due to total internal reflection. This lets the light travel great distances with minimal loss.

Data is then encoded onto the light pulses by varying a property of the light, such as its intensity or wavelength. The encoding scheme is chosen to suit the requirements of the application the network serves.
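Intensity-based encoding can be sketched with on-off keying, one of the simplest schemes: a bright pulse stands for 1 and a dark interval for 0. The example below is an illustration under that assumption; the function names, the power levels, and the 0.5 decision threshold are hypothetical choices, and deployed systems often use more elaborate modulation.

```python
def ook_encode(data: bytes, high: float = 1.0, low: float = 0.0) -> list:
    """Turn bytes into a sequence of light intensity levels, one per bit."""
    levels = []
    for byte in data:
        for shift in range(7, -1, -1):          # most significant bit first
            bit = (byte >> shift) & 1
            levels.append(high if bit else low)
    return levels


def ook_decode(levels, threshold: float = 0.5) -> bytes:
    """Recover bytes by comparing each received level with a threshold."""
    bits = [1 if level > threshold else 0 for level in levels]
    out = bytearray()
    for start in range(0, len(bits) - len(bits) % 8, 8):
        byte = 0
        for bit in bits[start:start + 8]:
            byte = (byte << 1) | bit
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    pulses = ook_encode(b"hi")
    weakened = [0.7 * p + 0.05 for p in pulses]   # crude stand-in for loss and noise
    print(ook_decode(weakened))
```

Because the receiver only asks whether each pulse is above or below the threshold, the data survives a moderate loss of signal strength.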

The light pulses propagate through the fiber-optic cables, delivering high-speed and reliable connectivity within the network. This results in the swift and secure transmission of important information between different locations.
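The speed of this propagation can be estimated from the fiber's refractive index. The sketch below assumes a group index of 1.468, an illustrative figure for silica fiber that does not come from this article; the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def propagation_delay_ms(length_km: float, group_index: float = 1.468) -> float:
    """One-way time for light to traverse a fiber of the given length.

    Light in glass travels at c divided by the group index, so the delay
    is length * group_index / c.
    """
    seconds = length_km * 1000 * group_index / SPEED_OF_LIGHT_M_PER_S
    return seconds * 1000


if __name__ == "__main__":
    print(round(propagation_delay_ms(1000), 2))   # a 1,000 km link
```

Under this assumption a 1,000 km link adds a little under 5 milliseconds of one-way delay, before any equipment processing time is counted.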

At the receiving end of the network, photosensitive devices, like photodiodes, detect the incoming light signals. The photodiodes then convert these light pulses back into electrical signals that electronic equipment can process.

The electrical signals undergo further processing and interpretation by electronic devices. This stage includes decoding, error correction, and other operations needed to ensure the data was transmitted accurately. The processed data is then used for various operations, supporting key functions such as communication, collaboration, and data-driven decision-making.
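The error correction step can be illustrated with a repetition code, the simplest form of forward error correction: each bit is sent several times and the receiver takes a majority vote. This is a teaching sketch with hypothetical function names; real optical systems use far stronger and more efficient codes.

```python
def fec_encode(bits, repeat: int = 3):
    """Repeat every bit so isolated errors can be outvoted later."""
    return [bit for bit in bits for _ in range(repeat)]


def fec_decode(coded, repeat: int = 3):
    """Majority-vote each group of repeated bits back into one bit."""
    decoded = []
    for start in range(0, len(coded), repeat):
        group = coded[start:start + repeat]
        decoded.append(1 if sum(group) * 2 > len(group) else 0)
    return decoded


if __name__ == "__main__":
    message = [1, 0, 1, 1]
    received = fec_encode(message)
    received[4] ^= 1               # simulate a single bit flipped in transit
    print(fec_decode(received) == message)
```

A single flipped bit in any group of three is corrected, at the cost of sending three times as much data.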

There are many different types of optical networks serving diverse purposes. The most commonly used ones are mesh networks, passive optical network (PON), free-space optical communication networks (FSO), wavelength division multiplexing (WDM) networks, synchronous optical networking (SONET) and synchronous digital hierarchy (SDH), optical transport network (OTN), fiber to the home (FTTH)/fiber to the premises (FTTP), and optical cross-connect (OXC).

Optical mesh networks interconnect nodes through multiple fiber links. This provides redundancy and allows for dynamic rerouting of traffic in case of link failures, enhancing the network’s reliability.
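Rerouting around a failed link can be sketched as a shortest-path search that skips the broken connection. The example below uses breadth-first search over a small, hypothetical five-node topology; the node names and function name are assumptions, and production networks rely on dedicated protection and restoration protocols rather than this simplified logic.

```python
from collections import deque


def shortest_path(links, source, destination, failed=frozenset()):
    """Fewest-hop path between two nodes, ignoring any failed links.

    links:  iterable of (node_a, node_b) bidirectional fiber links
    failed: set of (node_a, node_b) links that are currently down
    Returns a list of nodes, or None if the destination is unreachable.
    """
    adjacency = {}
    for a, b in links:
        if (a, b) in failed or (b, a) in failed:
            continue
        adjacency.setdefault(a, []).append(b)
        adjacency.setdefault(b, []).append(a)

    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == destination:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in adjacency.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


if __name__ == "__main__":
    mesh = [("A", "B"), ("B", "D"), ("A", "C"), ("C", "E"), ("E", "D")]
    print(shortest_path(mesh, "A", "D"))
    print(shortest_path(mesh, "A", "D", failed={("A", "B")}))
```

When the A-B link fails, traffic from A to D shifts to the longer path through C and E instead of being lost.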

PON is a fiber-optic network architecture that brings optical cabling and signals to the end user. It uses unpowered optical splitters to distribute signals to multiple users, making it passive.
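Because the splitter is unpowered, every subscriber receives only a fraction of the original light. The sketch below estimates that cost for an ideal splitter that divides power evenly; it ignores the extra loss real splitters introduce, and the function names and the 3 dBm launch power are illustrative assumptions.

```python
import math


def split_loss_db(ways: int) -> float:
    """Loss in dB when an ideal splitter divides light evenly among outputs."""
    if ways < 1:
        raise ValueError("a splitter needs at least one output")
    return 10 * math.log10(ways)


def power_per_subscriber_dbm(launch_power_dbm: float, ways: int) -> float:
    """Optical power on each splitter output, before any fiber loss."""
    return launch_power_dbm - split_loss_db(ways)


if __name__ == "__main__":
    for ways in (8, 16, 32, 64):
        print(ways, round(split_loss_db(ways), 2))
    print(round(power_per_subscriber_dbm(3.0, 32), 2))   # assumed 3 dBm launch power
```

Each doubling of the split ratio costs about 3 dB, which is why the number of users sharing one fiber trades off against reach.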

FSO uses free space to transmit optical signals between two points.

WDM uses different wavelengths of light for each signal, allowing for increased data capacity. Sub-types of WDM include coarse wavelength division multiplexing (CWDM) and dense wavelength division multiplexing (DWDM).

SONET and SDH are standardized protocols for transmitting large amounts of data over long distances using fiber-optic cables. SONET is more common in North America, while SDH is used in most other parts of the world.

OTN transports digital signals in the optical layer of communication networks. It includes functions such as error detection, performance monitoring, and fault management.

FTTH and FTTP refer to the deployment of optical fiber directly to residential or business premises, providing high-speed internet access.

OXC facilitates the switching of optical signals without converting them to electrical signals.

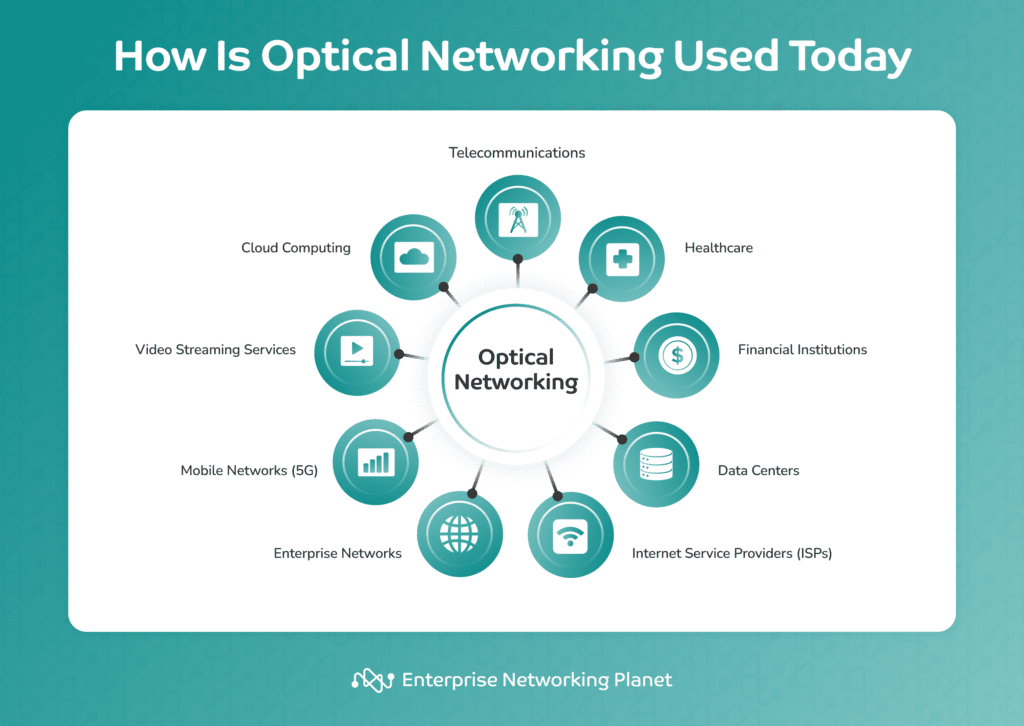

Various industries and domains today use optical networking for high-speed and efficient data transmission. These include telecommunications, healthcare, financial organizations, data centers, internet service providers (ISPs), enterprise networks, 5G networks, video streaming services, and cloud computing.

Optical networking is the foundation of phone and internet systems. Today, optical networking remains pivotal in telecommunications, connecting cell sites, ensuring high availability through dynamic traffic rerouting, and enabling high-speed broadband in metropolitan areas and long-distance networks.

For healthcare, optical networking supports rapid and secure transmission of medical data, expediting remote diagnostics and telemedicine services.

Financial organizations use this technology for fast and safe data transmission, which is indispensable for activities like high-frequency trading and connecting branches seamlessly.

Optical networking in data centers links servers and storage units, offering a high-bandwidth and low-latency infrastructure for reliable data communication.

Internet service providers (ISPs) employ optical networking to offer broadband services, using fiber-optic connections for quicker internet access.

Large businesses use internal optical networking to connect offices and data centers, maintaining high-speed and scalable communication within their infrastructure.

For 5G mobile networks, optical networking allows for increased data rates and low-latency requirements. Fiber-optic connections link 5G cell sites to the core network, bringing bandwidth for diverse applications.

Optical networks enable smooth data transmission, allowing streaming platforms to deliver high-quality video content and a better viewing experience.

Cloud service providers rely on optical networking to interconnect data centers and deliver scalable, high-performance cloud-based services.

The collaborative efforts of several optical networking companies and distinguished individuals have significantly shaped the optical networking landscape as we know it today.

Trends in optical networking, such as 5G integration, elastic optical networks, optical network security, interconnects in data centers, and green networking highlight the ongoing evolution of the technology to meet the demands of new technologies and applications.

Optical networking enables the necessary high-speed, low-latency connections to handle the data demands of 5G applications. 5G integration makes sure that you get fast and reliable connectivity for activities such as streaming, gaming, and emerging technologies like augmented reality (AR) and virtual reality (VR).

Ongoing advancements in coherent optics technology contribute to higher data rates, longer transmission distances, and increased capacity over optical networks. This is vital for accommodating the growing volume of data traffic and supporting applications that need high bandwidth.

Integration of optical networking with edge computing reduces latency and improves performance. This is essential for applications and services that need real-time responsiveness, such as autonomous vehicles, remote medical procedures, and industrial automation.

Adopting SDN and NFV in optical networking leads to better flexibility, scalability, and effective resource use. This lets operators dynamically allocate resources, optimize network performance, and respond quickly to changing demands, improving overall network efficiency.

Elastic optical networks allow for dynamic adjustments to the spectrum and capacity of optical channels based on traffic demands. This promotes optimal resource use and minimizes the risk of congestion during peak usage periods.
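Dynamic spectrum adjustment can be sketched as a slot allocation problem: the fiber's spectrum is divided into narrow slots, and each connection is given as many contiguous slots as its traffic needs. The example below uses a first-fit policy, which is one common and simple strategy; the function name and the eight-slot spectrum are hypothetical, and real networks must also keep the same slots free along every link of a path.

```python
def first_fit(spectrum, slots_needed: int):
    """Reserve the first run of contiguous free slots that is long enough.

    spectrum: list of booleans, True where a slot is already occupied.
    Marks the chosen slots as occupied and returns the starting index,
    or returns None if no suitable gap exists.
    """
    if slots_needed < 1:
        raise ValueError("slots_needed must be at least 1")
    run_start, run_length = 0, 0
    for index, occupied in enumerate(spectrum):
        if occupied:
            run_start, run_length = index + 1, 0
            continue
        run_length += 1
        if run_length == slots_needed:
            for slot in range(run_start, run_start + slots_needed):
                spectrum[slot] = True
            return run_start
    return None


if __name__ == "__main__":
    spectrum = [True, False, False, True, False, False, False, False]
    print(first_fit(spectrum, 3))   # a wide channel for heavy traffic
    print(first_fit(spectrum, 2))   # a narrower channel fits an earlier gap
    print(first_fit(spectrum, 2))   # no two adjacent free slots remain
```

A demand that needs less bandwidth takes fewer slots, leaving the rest of the spectrum available for other connections.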

Focusing on bolstering the security of optical networks, including encryption techniques, is important for protecting sensitive data and communications. As cyberthreats become more sophisticated, safeguarding your networks becomes paramount, especially when transmitting sensitive information.

The growing demand for high-speed optical interconnects in data centers is driven by the requirements of cloud computing, big data processing, and artificial intelligence applications. Optical interconnects have the bandwidth to handle large volumes of data within data center environments.

Efforts to make optical networks more energy-efficient and environmentally friendly align with broader sustainability goals. Green networking practices play a key role in decreasing the environmental impact of telecommunications infrastructure, making it more sustainable in the long run.

The progression of optical networking has been instrumental in shaping the history of computer networking. As the need for faster data transmission methods grew with the development of computer networks, optical networking provided a solution. By using light for data transmission, this technology enabled the creation of high-speed networks that we use today.

As it grows, optical networking is doing more than just providing faster internet speeds. Optical network security, for instance, can defend your organization against emerging cyberthreats, while trends like green networking can make your telecommunication infrastructure more sustainable over time.


Liz Laurente-Ticong is a tech specialist and multi-niche writer with a decade of experience covering software and technology topics and news. Her work has appeared in TechnologyAdvice.com as well as ghostwritten for a variety of international clients. When not writing, you can find Liz reading and watching historical and investigative documentaries. She is based in the Philippines.


Property of TechnologyAdvice. © 2025 TechnologyAdvice. All Rights Reserved
